SQL Relay Configuration Guide

- Quick Start

- Basic Configuration

- Modules

- Database Configuration

- Oracle

- Microsoft SQL Server (via ODBC)

- Microsoft SQL Server (via FreeTDS)

- SAP/Sybase (via the native SAP/Sybase library)

- SAP/Sybase (via FreeTDS)

- IBM DB2

- Informix

- MySQL/MariaDB

- PostgreSQL

- Firebird

- SQLite

- Teradata (via ODBC)

- Exasol (via ODBC)

- Apache Hive (via ODBC)

- Apache Impala (via ODBC)

- Amazon Redshift (via ODBC)

- Amazon Redshift (via PostgreSQL)

- Amazon Athena (via ODBC)

- Generic ODBC

- Database Connection Count

- Database Cursor Count

- Dynamic Scaling

- Listener Configuration

- Multiple Instances

- Starting Instances Automatically

- Client Protocol Options

- SQLRClient Protocol

- MySQL Protocol

- Basic Configuration

- Listener Options

- Authentication/Encryption Options

- Foreign Backend

- Special-Purpose Options

- Limitations

- PostgreSQL Protocol

- Multiple Protocols

- High Availability

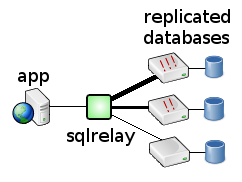

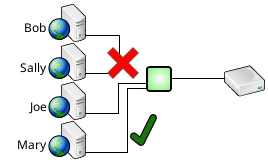

- Load Balancing and Failover With Replicated Databases or Database Clusters

- Already-Load Balanced Databases

- Master-Slave Query Routing

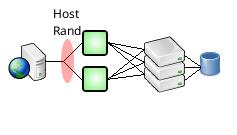

- Front-End Load Balancing and Failover

- Security Features

- Front-End Encryption and Secure Authentication

- Back-End Encryption and Secure Authentication

- Run-As User and Group

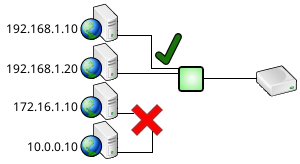

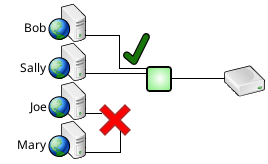

- IP Filtering

- Password Files

- Password Encryption

- Connection Schedules

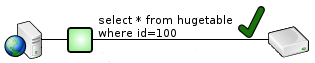

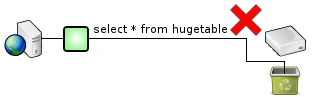

- Query Filtering

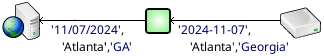

- Data Translation

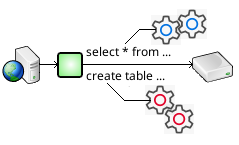

- Query Parsing

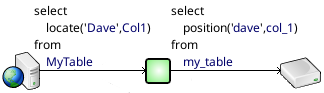

- Query Translation

- Bind Variable Translation

- Result Set Header Translation

- Result Set Translation

- Result Set Row Translation

- Result Set Row Block Translation

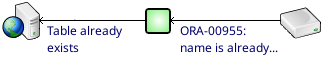

- Error Translation

- Module Data

- Query Directives

- Query and Session Routing

- Custom Queries

- Triggers

- Logging

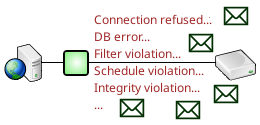

- Notifications

- Session-Queries

- Alternative Configuration File Options

- Advanced Configuration

Quick Start

In order to run, the SQL Relay server requires a configuration file. However, When SQL Relay is first installed, no such configuration file exists. You must create one in the appropriate location. This location depends on the platform and on how you installed SQL Relay.

- Unix and Linux

- Built from source - /usr/local/firstworks/etc/sqlrelay.conf (unless you specified a non-standard --prefix or --sysconfdir during the build)

- RPM package - /etc/sqlrelay.conf

- FreeBSD package - /usr/local/etc/sqlrelay.conf

- NetBSD package - /usr/pkg/etc/sqlrelay.conf

- OpenBSD package - /usr/local/etc/sqlrelay.conf

- Windows

- Built from source - C:\Program Files\Firstworks\etc\sqlrelay.conf

- Windows Installer package - C:\Program Files\Firstworks\etc\sqlrelay.conf (unless you specified a non-standard installation folder)

The most minimal sqlrelay.conf would be something like:

<?xml version="1.0"?>

<instances>

<instance id="example">

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

This configuration defines an instance of SQL Relay named example that opens and maintains a pool of 5 persistent connections to the ora1 instance of an Oracle database using scott/tiger credentials.

The instance can be started using:

sqlr-start -id example

( NOTE: When installed from packages, SQL Relay may have to be started and stopped as root.)

and can be accessed from the local machine using:

sqlrsh -host localhost -user scott -password tiger

( NOTE: By default, the user and password used to access SQL Relay are the same as the user and password that SQL Relay is configured to use to access the database. That is, they are the values of the user and password parameters of the string attribute of the connection tag. )

By default, SQL Relay listens on all available network interfaces, and can be accessed remotely by hostname. For example, if the server running SQL Relay is named sqlrserver then it can be accessed from another system using:

sqlrsh -host sqlrserver -user scott -password tiger

The instance can be stopped using:

sqlr-stop -id example

All running instances of SQL Relay can be stopped using:

sqlr-stop

(without the -id argument)

Basic Configuration

The example above may be sufficient for many use cases, but SQL Relay has many configuration options. For a production deployment, you will alomst certainly want to configure it further.

Modules

The SQL Relay server is highly modular. Configuring it largely consists of telling it which modules to load, and which options to use for each module. There are database connection modules, protocol modules, authentication modules, modules related to security, data translation modules, logging and notification modules, and others.

The module that you'll most likely need to configure first is a database connection module.

Database Configuration

By default, SQL Relay assumes that it's connecting to an Oracle database, but database connection modules for many other databases are provided.

The dbase attribute of the instance tag specifies which database connection module to load.

The connect string options (options in the string attribute of the connection tag) specify which parameters to use when connecting to the database. Connect string options are different for each database.

The connections tag also supports a debug attribute. If debug="yes" is configured in the connections tag (not in an individual connection tag) then debugging information about the connection is written to the destination of the debug logger module (if one is defined, see the debug logger module), or otherwise, to standard output. If debug="no" is configured, or if the debug attribute is omitted, then no debugging information is written.

Examples, illustrating how to connect to specific databases, follow.

Oracle

In this example, SQL Relay is configured to connect to an Oracle database. The dbase attribute defaults to "oracle", so the dbase attribute may be omitted when connecting to an Oracle database. It is just set here for illustrative purposes.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="oracle">

<connections>

<connection string="user=scott;password=tiger;oracle_home=/u01/app/oracle/product/12.1.0;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

The oracle_home option refers to the base directory of an Oracle instance. On Windows platforms, it should be specified as a Windows-style path with doubled backslashes. For example:

C:\\Oracle\\ora12.1

The oracle_home option is often unnecessary though, as the ORACLE_HOME environment variable is usually set system-wide. Also, since Oracle 10g, the TNS_ADMIN environment variable is preferred to ORACLE_HOME, and the tns_admin option can be used to serve the same purpose. See the SQL Relay Configuration Reference for details on the tns_admin option.

The oracle_sid option refers to an entry in the tnsnames.ora file (usually $ORACLE_HOME/network/admin/tnsnames.ora or $TNS_ADMIN/tnsnames.ora) similar to:

ORCL =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = examplehost)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = ora1)

)

)

( NOTE: The tnsnames.ora file must be readable by the user that the instance of SQL Relay runs as.)

If you are using Oracle Instant Client, then it's likely that you don't have an ORACLE_HOME/TNS_ADMIN, or a tnsnames.ora file. In that case, the oracle_sid can be set directly to a tnsnames-style expression and the oracle_home/tns_admin option can be omitted.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="oracle">

<connections>

<connection string="user=scott;password=tiger;oracle_sid=(DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = examplehost)(PORT = 1521)) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = ora1)))"/>

</connections>

</instance>

</instances>

See the SQL Relay Configuration Reference for other valid Oracle connect string options.

See Getting Started With Oracle for general assistance installing and configuring an Oracle database.

Microsoft SQL Server (via ODBC)

On Windows platforms, ODBC can be used to access a Microsoft SQL Server database. There is also a Microsoft-provided ODBC driver for some versions of Linux.

In this example, SQL Relay is configured to connect to a Microsoft SQL Server database via ODBC.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="odbc">

<connections>

<connection string="dsn=odbcdsn;user=odbcuser;password=odbcpassword"/>

</connections>

</instance>

</instances>

The dsn option in the string attribute of the connection tag refers to an ODBC DSN (Data Source Name).

On Windows platforms, the DSN is an entry in the Windows Registry, created by the ODBC Data Source Administrator (look for "Set up ODBC data sources" in the Control Panel or just run odbcad32.exe).

On Linux and Unix platforms, the DSN is an entry in the odbc.ini file (usually /etc/odbc.ini).

[exampledsn] Description=SQL Server Driver=ODBC Driver 11 for SQL Server Server=examplehost Port=1433 Database=

The Driver parameter refers to an entry in the odbcinst.ini file (usually /etc/odbcinst.ini) which identifies driver files:

[ODBC Driver 11 for SQL Server] Description=Microsoft ODBC Driver 11 for SQL Server Driver=/opt/microsoft/msodbcsql/lib64/libmsodbcsql-11.0.so.2270.0 Threading=1 UsageCount=1

( NOTE: The odbc.ini and odbcinst.ini files must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid ODBC connect string options.

See Getting Started With ODBC (on a non-MS platform) for general assistance installing and configuring ODBC on a non-MS platform.

Microsoft SQL Server (via FreeTDS)

On Windows platforms, ODBC can be used to access a Microsoft SQL Server database. There is also a Microsoft-provided ODBC driver for some versions of Linux.

However, on Linux and Unix platforms, access to Microsoft SQL Server databases is also available via FreeTDS.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="freetds">

<connections>

<connection string="server=FREETDSSERVER;user=freetdsuser;password=freetdspassword;db=freetdsdb"/>

</connections>

</instance>

</instances>

The server option refers to an entry in the freetds.conf file (usually /etc/freetds.conf or /usr/local/etc/freetds.conf) which identifies the database server, similar to:

[FREETDSSERVER] host = examplehost port = 1433 tds version = 7.0 client charset = UTF-8

( NOTE: The freetds.conf file must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid FreeTDS connect string options.

SAP/Sybase (via the native SAP/Sybase library)

In this example, SQL Relay is configured to connect to a SAP/Sybase database via the native SAP/Sybase library.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="sap">

<connections>

<connection string="sybase=/opt/sap;lang=en_US;server=SAPSERVER;user=sapuser;password=sappassword;db=sapdb"/>

</connections>

</instance>

</instances>

The sybase option refers to the base directory of the SAP/Sybase software installation. On Windows platforms, it should be specified as a Windows-style path with doubled backslashes. For example:

C:\\SAP

The sybase option is often unnecessary though, as the SYBASE environment variable is usually set system-wide.

On Linux/Unix platforms, the server option refers to an entry in the interfaces (usually $SYBASE/interfaces) file which identifies the database server, similar to:

SAPSERVER master tcp ether examplehost 5000 query tcp ether examplehost 5000

( NOTE: The interfaces file must be readable by the user that the instance of SQL Relay runs as.)

On Windows platforms, the server option refers to a similar entry created in an opaque location with the Open Client Directory Services Editor (dsedit).

The lang option sets the language to a value that is known to be supported by Sybase. This may not be necessary on all platforms. See the FAQ for more info.

See the SQL Relay Configuration Reference for other valid SAP/Sybase connect string options.

See Getting Started With SAP/Sybase for general assistance installing and configuring an SAP/Sybase database.

SAP/Sybase (via FreeTDS)

The native SAP/Sybase library is available on Windows and on some Linux/Unix platforms.

However, on Linux and Unix platforms, access to SAP/Sybase databases is also available via FreeTDS.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="freetds">

<connections>

<connection string="server=FREETDSSERVER;user=freetdsuser;password=freetdspassword;db=freetdsdb"/>

</connections>

</instance>

</instances>

The server option refers to an entry in the freetds.conf file (usually /etc/freetds.conf or /usr/local/etc/freetds.conf) which identifies the database server, similar to:

[FREETDSSERVER] host = examplehost port = 5000 tds version = 5.0

( NOTE: The freetds.conf file must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid FreeTDS connect string options.

See Getting Started With SAP/Sybase for general assistance installing and configuring an SAP/Sybase database.

IBM DB2

In this example, SQL Relay is configured to connect to an IBM DB2 database.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="db2">

<connections>

<connection string="db=exampledb;user=db2inst1;password=db2inst1pass;connecttimeout=0"/>

</connections>

</instance>

</instances>

The db option refers to an entry in the local DB2 instance's database catalog. See Getting Started With IBM DB2 - Accessing Remote Instances for more information.

The connecttimeout=0 option tells SQL Relay not to time out when connecting to the database. DB2 instances can take a long time to log in to sometimes. The default timeout is often too short.

See the SQL Relay Configuration Reference for other valid IBM DB2 connect string options.

See Getting Started With IBM DB2 for general assistance installing and configuring an IBM DB2 database.

Informix

In this example, SQL Relay is configured to connect to an Informix database.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="informix">

<connections>

<connection string="informixdir=/opt/informix;servername=ol_informix1210;db=informixdb;user=informixuser;password=informixpassword"/>

</connections>

</instance>

</instances>

The informixdir option refers to the base directory of the Informix software installation. On Windows platforms, it should be specified as a Windows-style pathwith doubled backslashes. For example:

C:\\Program Files\\IBM Informix Software Bundle

The informixdir option is often unnecessary, as the INFORMIXDIR environment variable is usually set system-wide.

On Linux and Unix platforms, the servername option refers to an entry in the sqlhosts file (usually $INFORMIXDIR/etc/sqlhosts) which identifies the database server, similar to:

ol_informix1210 onsoctcp 192.168.123.59 ol_informix1210

The second ol_informix1210 in the sqlhosts file refers to an entry in /etc/services which identifies the port that the server is listening on, similar to:

ol_informix1210 29756/tcp

( NOTE: The sqlhosts and /etc/services files must be readable by the user that the instance of SQL Relay runs as.)

On Windows platforms, the servername option refers to a similar entry created in an opaque location with the Setnet32 program.

See the SQL Relay Configuration Reference for other valid Informix connect string options.

MySQL/MariaDB

In this example, SQL Relay is configured to connect to a MySQL/MariaDB database.

<?xml version="1.0"?> <instances> <instance id="example" dbase="mysql"> <connections> <connection string="user=mysqluser;password=mysqlpassword;db=mysqldb;host=mysqlhost"/> </connections> </instance> </instances>

The host and db options indicate that SQL Relay should connect to the database exampledb on the host examplehost.

See the SQL Relay Configuration Reference for other valid MySQL/MariaDB connect string options.

See Getting Started With MySQL for general assistance installing and configuring a MySQL/MariaDB database.

PostgreSQL

In this example, SQL Relay is configured to connect to a PostgreSQL database.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="postgresql">

<connections>

<connection string="user=postgresqluser;password=postgresqlpassword;host=postgresqlhost;db=postgresqldb"/>

</connections>

</instance>

</instances>

The host and db options indicate that SQL Relay should connect to the database exampledb on the host examplehost.

See the SQL Relay Configuration Reference for other valid PostgreSQL connect string options.

See Getting Started With PostgreSQL for general assistance installing and configuring a PostgreSQL database.

Firebird

In this example, SQL Relay is configured to connect to a Firebird database.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="firebird">

<connections>

<connection string="user=firebirduser;password=firebirdpassword;db=firebirdhost:/opt/firebird/firebirddb.gdb"/>

</connections>

</instance>

</instances>

The db option indicates that SQL Relay should connect to the database located at /opt/firebird/exampledb.gdb on the host examplehost.

Note that if the database is located on a Windows host, then the path segment of the db option should be specified as a Windows-style path with doubled backslashes. For example:

examplehost:C:\\Program Files\\Firebird\\Firebird_3_0\\exampledb.gdb

See the SQL Relay Configuration Reference for other valid Firebird connect string options.

See Getting Started With Firebird for general assistance installing and configuring a Firebird database.

SQLite

In this example, SQL Relay is configured to connect to a SQLite database.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="sqlite">

<connections>

<connection string="db=/var/sqlite/sqlitedb;user=sqliteuser;password=sqlitepassword"/>

</connections>

</instance>

</instances>

The db option indicates that SQL Relay should connect to the database /var/sqlite/exampledb.

Note that the database file (exampledb in this case) and the directory that its located in (/var/sqlite in this case) must both be readable and writable by the user that the instance of SQL Relay runs as.

Also note the user and password parameters in the connection string. SQLite doesn't require these for SQL Relay to access the database, but they are included to define a user and password for accessing SQL Relay itself.

SQLite also supports a high-performance in-memory mode where tables are maintained in memory and nothing is written to permanent storage. To use this mode with SQL Relay, set the db option to :memory:.

As each running instance of sqlr-connection will have its own separate in-memory database, you almost certainly want to limit the number of connections to 1.

<?xml version="1.0"?>

<instances>

<instance id="example" dbase="sqlite" connections="1">

<connections>

<connection string="db=:memory:;user=sqliteuser;password=sqlitepassword"/>

</connections>

</instance>

</instances>

Note that the entire in-memory database will be lost when SQL Relay is stopped. There is no way to preserve it. Such is the nature of pure in-memory databases.

See the SQL Relay Configuration Reference for other valid SQLite connect string options.

See Getting Started With SQLite for general assistance installing and configuring a SQLite database.

Teradata (via ODBC)

Teradata ODBC drivers are available for many platforms, including Windows and Linux.

In this example, SQL Relay is configured to connect to a Teradata database via ODBC.

<?xml version="1.0"?> <instances> <instance id="example" dbase="odbc"> <connections> <connection string="dsn=teradata;user=teradatauser;password=teradatapassword"/> </connections> </instance> <instances>

The dsn option in the string attribute of the connection tag refers to an ODBC DSN (Data Source Name).

On Windows platforms, the DSN is an entry in the Windows Registry, created by the ODBC Data Source Administrator (look for "Set up ODBC data sources" in the Control Panel or just run odbcad32.exe).

On Linux and Unix platforms, the DSN is an entry in the odbc.ini file (usually /etc/odbc.ini).

[teradata] Description=Teradata Database ODBC Driver 16.20 Driver=/opt/teradata/client/16.20/lib64/tdataodbc_sb64.so DBCName=teradatahost UID=testuser PWD=testpassword AccountString= CharacterSet=ASCII DatasourceDNSEntries= DateTimeFormat=IAA DefaultDatabase= DontUseHelpDatabase=0 DontUseTitles=1 EnableExtendedStmtInfo=1 EnableReadAhead=1 IgnoreODBCSearchPattern=0 LogErrorEvents=0 LoginTimeout=20 MaxRespSize=65536 MaxSingleLOBBytes=0 MaxTotalLOBBytesPerRow=0 MechanismName= NoScan=0 PrintOption=N retryOnEINTR=1 ReturnGeneratedKeys=N SessionMode=System Default SplOption=Y TABLEQUALIFIER=0 TCPNoDelay=1 TdmstPortNumber=1025 UPTMode=Not set USE2XAPPCUSTOMCATALOGMODE=0 UseDataEncryption=0 UseDateDataForTimeStampParams=0

( NOTE: The odbc.ini file must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid ODBC connect string options.

Exasol (via ODBC)

Exasol ODBC drivers are available for Windows and Linux.

In this example, SQL Relay is configured to connect to an Exasol database via ODBC.

<?xml version="1.0"?> <instances> <instance id="example" dbase="odbc"> <connections> <connection string="dsn=exasolution-uo2214lv2_64;user=sys;password=exasol"/> </connections> </instance> <instances>

The dsn option in the string attribute of the connection tag refers to an ODBC DSN (Data Source Name).

On Windows platforms, the DSN is an entry in the Windows Registry, created by the ODBC Data Source Administrator (look for "Set up ODBC data sources" in the Control Panel or just run odbcad32.exe).

On Linux and Unix platforms, the DSN is an entry in the odbc.ini file (usually /etc/odbc.ini).

[exasolution-uo2214lv2_64] DRIVER=/home/dmuse/software/EXASOL_ODBC-6.0.11/lib/linux/x86_64/libexaodbc-uo2214lv2.so EXAHOST=192.168.123.12:8563 EXAUID=sys EXAPWD=exasol

( NOTE: The odbc.ini file must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid ODBC connect string options.

Apache Hive (via ODBC)

Apache Hive is a data warehouse built on top of Apache Hadoop. Cloudera provides a Hive ODBC driver for Windows and Linux platforms which implements a SQL interface to Hive. This driver has some quirks.

The most significant quirk is that version 2.6.4.1004 (and probably other versions) for Linux ship with their own copy of libssl/libcrypto, version 1.1. These tend to conflict with the versions of libssl/libcrypto that SQL Relay itself is linked against, causing the driver to malfunction. The only known solution is to build a copy of Rudiments and SQL Relay from source, configuring rudiments with the --disable-ssl --disable-gss --disable-libcurl options, and use this copy to access Hive. Unfortunately, this has a side effect of disabling all SSL/TLS and Kerberos support in SQL Relay, as well as disabling the ability to load config files over https and ssh. Perhaps a future version of the Cloudera Hive ODBC driver for Linux will link against the system versions of libssl/libcrypto and eliminate this quirk.

In this example, SQL Relay is configured to connect to a Apache Hive data warehouse via ODBC.

<?xml version="1.0"?> <instances> <instance id="example" dbase="odbc"> <session> <start> <runquery> use hivedb </runquery> <runquery> select 1 </runquery> </start> </session> <connections> <connection string="dsn=hive;user=hiveuser;password=hivepassword;db=hivedb;overrideschema=hivedb"/> </connections> </instance> <instances>

The contents of the session tag work around another quirk. "hivedb" is specified in the db option of the string attribute of the connection tag, but this doesn't appear to be adequate to actually put you in that schema. Running the "use hivedb" query at the beginning of the session helps. But, it appears that the schema isn't actually selected until the first query is run. So, the "select 1" query immediately following the use query solves this.

The overrideschema option in the string attribute of the connection tag works around yet another quirk. The database tends to report the current schema as something other than "hivedb", but when running "show tables" or "describe" queries, the database wants "hivedb" to be passed in as the schema for the table names.

The dsn option in the string attribute of the connection tag refers to an ODBC DSN (Data Source Name).

On Windows platforms, the DSN is an entry in the Windows Registry, created by the ODBC Data Source Administrator (look for "Set up ODBC data sources" in the Control Panel or just run odbcad32.exe).

On Linux and Unix platforms, the DSN is an entry in the odbc.ini file (usually /etc/odbc.ini).

[hive] Driver=/opt/cloudera/hiveodbc/lib/64/libclouderahiveodbc64.so HiveServerType=2 Host=hiveserver Port=10000

( NOTE: The odbc.ini file must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid ODBC connect string options.

Apache Impala (via ODBC)

Apache Impala is a query engine for Apache Hadoop. Cloudera provides an Impala ODBC driver for Windows and Linux platforms. This driver has some quirks.

The most significant quirk is that version 2.5.20 (and probably other versions) for Linux ship linked against libsasl2.so.2. This library can be found on RedHat Enterprise 6 (or CentOS 6) but modern Linux systems have moved on to newer versions. To use the driver, it is necessary to get a copy of libsasl2.so.2.0.23 (or a similar version) from an old enough system, install it in an appropriate lib directory, and create libsasl2.so.2 as a symlink to it in that same directory.

In this example, SQL Relay is configured to connect to a Impala database via ODBC.

<?xml version="1.0"?> <instances> <instance id="example" dbase="odbc"> <session> <start> <runquery> use default </runquery> <runquery> select 1 </runquery> </start> </session> <connections> <connection string="dsn=impala;overrideschema=%;unicode=no"/> </connections> </instance> <instances>

The contents of the session tag work around a quirk. Normally the schema would be selected by setting the db option of the string attribute of the connection tag, but this doesn't work at all. Running the "use default" query at the beginning of the session helps. But, it appears that the schema isn't actually selected until the first query is run. So, the "select 1" query immediately following the use query solves this.

The overrideschema option in the string attribute of the connection tag works around yet another quirk. When running "show tables" or "describe" queries, the database needs "%" (the SQL wildcard) to be passed in as the schema for the table names.

The driver also doesn't support unicode, so the unicode=no option in the string attribute of the connection tag tells SQL Relay not to try.

Note that there are no user/password options in the string attribute of the connection tag. Impala supports several different authentication mechanisms, but by default, it allows unauthenticated access to the database (AuthMech=0). This can be configured in the DSN though, and if a user/password authentication mechansim is selected, then the standard user/password options can be included.

The dsn option in the string attribute of the connection tag refers to an ODBC DSN (Data Source Name).

On Windows platforms, the DSN is an entry in the Windows Registry, created by the ODBC Data Source Administrator (look for "Set up ODBC data sources" in the Control Panel or just run odbcad32.exe).

On Linux and Unix platforms, the DSN is an entry in the odbc.ini file (usually /etc/odbc.ini).

[impala] Description=Cloudera ODBC Driver for Impala (64-bit) DSN Driver=Cloudera ODBC Driver for Impala 64-bit HOST=impalaserver PORT=21050 Database=impaladb AuthMech=0 UID=default PWD= TSaslTransportBufSize=1000 RowsFetchedPerBlock=10000 SocketTimeout=0 StringColumnLength=32767 UseNativeQuery=0

The Driver parameter refers to an entry in the odbcinst.ini file (usually /etc/odbcinst.ini) which identifies driver files:

[Cloudera ODBC Driver for Impala 64-bit] Description=Cloudera ODBC Driver for Impala (64-bit) Driver=/opt/cloudera/impalaodbc/lib/64/libclouderaimpalaodbc64.so

( NOTE: The odbc.ini and odbcinst.ini files must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid ODBC connect string options.

Amazon Redshift (via ODBC)

Amazon Redshift is a cloud-hosted data warehouse service. Amazon provides a Redshift ODBC driver for Windows and Linux platforms. This driver (or perhaps Redshift itself) has some quirks.

In this example, SQL Relay is configured to connect to a Redshift database via ODBC.

<?xml version="1.0"?> <instances> <instance id="example" dbase="odbc" ignoreselectdatabase="yes"> <connections> <connection string="dsn=redshift;user=redshiftuser;password=redshiftpassword;overrideschema=public"/> </connections> </instance> <instances>

The ignoreselectdatabase="yes" attribute of the instance tag works around a quirk. It instructs SQL Relay to ignore "use database" queries, or other attempts to select the current database/schema. These options tend to put the connection outside of any database/schema, and unable to return to the desired database/schema.

The overrideschema option in the string attribute of the connection tag works around another quirk. When running "show tables" or "describe" queries, the database needs "public" to be passed in as the schema for the table names.

The dsn option in the string attribute of the connection tag refers to an ODBC DSN (Data Source Name).

On Windows platforms, the DSN is an entry in the Windows Registry, created by the ODBC Data Source Administrator (look for "Set up ODBC data sources" in the Control Panel or just run odbcad32.exe).

On Linux and Unix platforms, the DSN is an entry in the odbc.ini file (usually /etc/odbc.ini).

[redshift] Driver=Amazon Redshift (x64) Host=redshifthost Database=redshiftdb Username=redshiftuser Password=redshiftpassword

The Driver parameter refers to an entry in the odbcinst.ini file (usually /etc/odbcinst.ini) which identifies driver files:

[Amazon Redshift (x64)] Description=Amazon Redshift ODBC Driver (64-bit) Driver=/opt/amazon/redshiftodbc/lib/64/libamazonredshiftodbc64.so

( NOTE: The odbc.ini and odbcinst.ini files must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid ODBC connect string options.

Amazon Redshift (via PostgreSQL)

Amazon Redshift is a cloud-hosted data warehouse service. Since it is based on PostgreSQL 8, it is possible to connect to a Redshift instance using the PostgreSQL client library.

<?xml version="1.0"?> <instances> <instance id="example" dbase="postgresql" ignoreselectdatabase="yes"> <connections> <connection string="host=redshifthost;port=5439;user=redshiftuser;password=redshiftpassword;db=redshiftdb;typemangling=lookup;tablemangling=lookup"/> </connections> </instance> <instances>

The ignoreselectdatabase="yes" attribute of the instance tag works around a quirk. It instructs SQL Relay to ignore "use database" queries, or other attempts to select the current database/schema. These options tend to put the connection outside of any database/schema, and unable to return to the desired database/schema.

The port option in the string attribute of the connection tag instructs SQL Relay to connect to the Redshift default port of 5439 rather than the PostgreSQL default port of 5432.

The typemangling and tablemangling options in the string attribute of the connection tag instruct SQL Relay to return data type and table names rather than data type and table object ID's (the default for PostgreSQL)

See the SQL Relay Configuration Reference for other valid PostgreSQL connect string options.

Amazon Athena (via ODBC)

Amazon Athena is an SQL interface to data stored in Amazon S3. Simba provides an Athena ODBC driver for Windows and Linux platforms. This driver (or perhaps Athena itself) has some quirks.

In this example, SQL Relay is configured to connect to a Athena database via ODBC.

<?xml version="1.0"?> <instances> <instance id="example" dbase="odbc"> <connections> <connection string="dsn=athena;user=RDXDE23X56D9822FFGE3;password=uEEmF+RDexoXpqTD5MiP3421emYP2M+4Rqo6GHio;overrideschema=athenadb"/> </connections> </instance> <instances>

Note the odd user and password options in the string attribute of the connection tag.

Note also that the db option is missing from the string attribute of the connection tag. The schema must be set in the DSN, and setting it in the connection string doesn't override the one set in the DSN.

The overrideschema option in the string attribute of the connection tag works around a quirk. The database tends to report the current schema as something other than "athenadb", but when running "show tables" or "describe" queries, the database wants "athenadb" to be passed in as the schema for the table names.

The dsn option in the string attribute of the connection tag refers to an ODBC DSN (Data Source Name).

On Windows platforms, the DSN is an entry in the Windows Registry, created by the ODBC Data Source Administrator (look for "Set up ODBC data sources" in the Control Panel or just run odbcad32.exe).

On Linux and Unix platforms, the DSN is an entry in the odbc.ini file (usually /etc/odbc.ini).

[athena] Description=Simba Athena ODBC Driver (64-bit) DSN Driver=/opt/simba/athenaodbc/lib/64/libathenaodbc_sb64.so AwsRegion=us-east-1 Schema=athenadb S3OutputLocation=s3://athenadata/ UID=RDXDE23X56D9822FFGE3 PWD=uEEmF+RDexoXpqTD5MiP3421emYP2M+4Rqo6GHio

The Driver parameter refers to an entry in the odbcinst.ini file (usually /etc/odbcinst.ini) which identifies driver files:

[Simba Athena ODBC Driver 64-bit] Description=Simba Athena ODBC Driver (64-bit) Driver=/opt/simba/athenaodbc/lib/64/libathenaodbc_sb64.so

( NOTE: The odbc.ini and odbcinst.ini files must be readable by the user that the instance of SQL Relay runs as.)

See the SQL Relay Configuration Reference for other valid ODBC connect string options.

Generic ODBC

ODBC can be used to access many databases for which SQL Relay has no native support.

Accessing a database via an ODBC driver usually involves:

- Installing the driver

- Creating a DSN

- On Windows this is done via a control panel

- On Linux/Unix it is done by adding entries to the odbc.ini and sometimes odbcinst.ini files

- Creating an instance of SQL Relay with dbase="odbc" which refers to the DSN, optionally specifying the user and password

See the examples above for more details. It should be possible to adapt one of them to the ODBC driver that you would like to use.

Note that many ODBC drivers have quirks. Many of the examples above demonstrate ways of working around some of these quirks.

Database Connection Count

By default, SQL Relay opens and maintains a pool of 5 persistent database connections, but the number of connections can be configured using the connections attribute.

<?xml version="1.0"?>

<instances>

<instance id="example" connections="10">

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

The number of connections determines how many client applications can access the database simultaneously. In this example, up to 10, assuming each client only needs one connection. Additional clients would be queued and would have to wait for one of the first 10 to disconnect before being able to access the database.

( NOTE: Any number of connections may be configured, up to an "absolute max connections" limit defined at compile-time, which defaults to 4096. To find the limit on your system, run:

The command above also returns the "shmmax requirement" for the configuration. "shmmax" refers to the maximum size of a single shared memory segment, a tunable kernel parameter on most systems. The default shmmax requirement is about 5mb. On modern systems, shmmax defaults to at least 32mb, but on older systems it commonly defaulted to 512k. In any case, if the shmmax requirement exceeds the value of your system's shmmax parameter, then you will have to reconfigure the parameter before SQL Relay will start successfully. This may be done at runtime on most modern systems, but on older systems you may have to reconfigure and rebuild the kernel, and reboot.)sqlr-start -abs-max-connections

For Performance

In a performance-oriented configuration, a good rule of thumb is to open as many connections as you can. That number is usually environment-specific, and dictated by database, system and network resources.

For Throttling

If you intend to throttle database access to a particular application, then you may intentionally configure a small number of connections.

Database Cursor Count

Database cursors are used to execute queries and step through result sets. Most applications only need to use one cursor at a time. Some apps require more though, either because they run nested queries, or sometimes because they just don't properly free them.

SQL Relay maintains persistent cursors as well as connections. By default, each connection opens one cursor, but the number of cursors opened by each connection can be configured using the cursors attribute.

<?xml version="1.0"?>

<instances>

<instance id="example" connections="10" cursors="2">

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

Any number of cursors can be opened. A good rule of thumb is to open as few as possible, but as many as you know that your application will need.

( NOTE: The documentation above says that by default, each connection opens one cursor, and this is true, but it would be more accurate to say that by default each connection opens one cursor, but will open additional cursors on-demand, up to 5. This is because it is common for an app to run at least one level of nested queries. For example, it is common to run a select and then run an insert, update, or delete for each row in the result set. Unfortunately, it is also not uncommon for apps to just manage cursors poorly. So, SQL Relay's default configuration offers a bit of flexibility to accommodate these circumstances. See the next section on Dynamic Scaling for more information about configuring connections and cursors to scale up and down on-demand.)

Dynamic Scaling

Both connections and cursors can be configured to scale dynamically - open on demand and then die off when no longer needed. This feature is useful if you have spikes in traffic during certain times of day or if your application has a few modules that occasionally need more cursors than usual.

The maxconnections and maxcursors attribute define the upper bounds.

<?xml version="1.0"?>

<instances>

<instance id="example" connections="10" maxconnections="20" cursors="2" maxcursors="10">

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

In this example, 10 connections will be started initially but more will be be started on-demand, up to 20. Each of the newly spawned connections will die off if they are inactive for longer than 1 minute.

In this example, each connection will initially open 2 cursors but more will be opened on-demand, up to 10. Each newly opened cursor will be closed as soon as it is no longer needed.

Other attributes that control dynamic scaling behavior include:

- maxqueuelength

- growby

- ttl

- cursors_growby

See the SQL Relay Configuration Reference for more information on these attributes.

Listener Configuration

By default, SQL Relay listens for client connections on port 9000, on all available network interfaces.

It can be configured to listen on a different port though...

<?xml version="1.0"?>

<instances>

<instance id="example">

<listeners>

<listener protocol="sqlrclient" port="9001"/>

</listeners>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

...and accessed using:

sqlrsh -host sqlrserver -port 9001 -user scott -password tiger

It can also be configured to listen on a unix socket...

<?xml version="1.0"?>

<instances>

<instance id="example">

<listeners>

<listener protocol="sqlrclient" socket="/tmp/example.socket">

</listener>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

( NOTE: The user that SQL Relay runs as must be able to read and write to the path of the socket.)

...and accessed from the local server using:

sqlrsh -socket /tmp/example.socket -user scott -password tiger

If the server has multiple network interfaces, SQL Relay can also be configured to listen on specific IP addresses.

<?xml version="1.0"?>

<instances>

<instance id="example">

<listeners>

<listener protocol="sqlrclient" addresses="192.168.1.50,192.168.1.51"/>

</listeners>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

When configured this way, it can be accessed on 192.168.1.50 and 192.168.1.51 but not on 127.0.0.1 (localhost).

If the socket option is specified but port and addresses options are not, then SQL Relay will only listen on the socket. If addresses/port and socket options are both specified then it listens on both.

The listeners tag also supports a debug attribute. If debug="yes" is configured in the listeners tag (not in an individual listener tag) then debugging information about the listener is written to the destination of the debug logger module (if one is defined, see the debug logger module), or otherwise, to standard output. If debug="no" is configured, or if the debug attribute is omitted, then no debugging information is written.

Multiple Instances

Any number of SQL Relay instances can be defined in the configuration file.

In following example, instances that connect to Oracle, SAP/Sybase and DB2 are defined in the same file.

<?xml version="1.0"?>

<instances>

<instance id="oracleexample">

<listeners>

<listener port="9000"/>

</listeners>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

<instance id="sapexample" dbase="sap">

<listeners>

<listener port="9001"/>

</listeners>

<connections>

<connection string="sybase=/opt/sap;lang=en_US;server=SAPSERVER;user=sapuser;password=sappassword;db=sapdb"/>

</connections>

</instance>

<instance id="db2example" dbase="db2">

<listeners>

<listener port="9002"/>

</listeners>

<connections>

<connection string="db=db2db;user=db2inst1;password=db2inst1pass;lang=C;connecttimeout=0"/>

</connections>

</instance>

</instances>

These instances can be started using:

sqlr-start -id oracleexample sqlr-start -id sapexample sqlr-start -id db2example

( NOTE: When installed from packages, SQL Relay may have to be started and stopped as root.)

...and accessed using:

sqlrsh -host sqlrserver -port 9000 -user scott -password tiger sqlrsh -host sqlrserver -port 9001 -user sapuser -password sappassword sqlrsh -host sqlrserver -port 9002 -user db2inst1 -password db2inst1pass

Starting Instances Automatically

In all previous examples sqlr-start has been called with the -id option, specifying which instance to start. If sqlr-start is called without the -id option then it will start all instances configured with the enabled attribute set to yes.

For example, if the following instances are defined...

<?xml version="1.0"?>

<instances>

<instance id="oracleexample" enabled="yes">

<listeners>

<listener port="9000"/>

</listeners>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

<instance id="sapexample" enabled="yes" dbase="sap">

<listeners>

<listener port="9001"/>

</listeners>

<connections>

<connection string="sybase=/opt/sap;lang=en_US;server=SAPSERVER;user=sapuser;password=sappassword;db=sapdb"/>

</connections>

</instance>

<instance id="db2example" dbase="db2">

<listeners>

<listener port="9002"/>

</listeners>

<connections>

<connection string="db=db2db;user=db2inst1;password=db2inst1pass;lang=C;connecttimeout=0"/>

</connections>

</instance>

</instances>

...then calling sqlr-start without the -id parameter will start oracleexample and sapexample because enabled="yes" is configured for those instances. db2example will not be started because enabled="yes" is not configured for that instance.

When installed on most platforms, SQL Relay creates a systemd service file (usually in /usr/lib/systemd/system or /lib/systemd/system) or an init script in the appropriate place under /etc. These call sqlr-start with no -id option. If configured to run at boot, they will start all instances for which enabled="yes" is configured.

How to enable the service file or init script depends on what platform you are using.

On systemd-enabled Linux, this usually involves running:

systemctl enable sqlrelay.service

On non-systemd-enabled Linux, Solaris, SCO and other non-BSD Unixes, this usually involves creating a symlink from /etc/init.d/sqlrelay to /etc/rc3.d/S85sqlrelay. This can be done manually, but most platforms provide utilities to do it for you.

Redhat-derived Linux distributions have a chkconfig command that can do this for you:

chkconfig --add sqlrelay

Debian-derived Linux distributions provide the update-rc.d command:

update-rc.d sqlrelay defaults

Solaris provides svcadm:

svcadm enable sqlrelay

On FreeBSD you must add a line to /etc/rc.conf like:

sqlrelay_enabled=YES

On NetBSD you must add a line to /etc/rc.conf like:

sqlrelay=YES

On OpenBSD you must add a line to /etc/rc.conf like:

sqlrelay_flags=""

Client Protocol Options

Whether written using the native SQL Relay API, or a connector of some sort, SQL Relay apps generally communicate with SQL Relay using SQLRClient, the native SQL Relay client-server protocol.

However, SQL Relay also supports the MySQL and PostgreSQL client-server protocols. When SQL Relay is configured to use one of these, it allows MySQL/MariaDB and PostgreSQL apps to communicate directly with SQL Relay without modification, without having to install any software on the client.

In these configurations, SQL Relay becomes a transparent proxy. MySQL/MariaDB or PostgreSQL apps aimed at SQL Relay still think that they're talking to a MySQL/MariaDB or PostgreSQL database, but in fact, are talking to SQL Relay.

SQLRClient Protocol

SQLRClient is the native SQL Relay protocol, enabled by default. The example configurations above and throughout most of the rest of the guide configure SQL Relay to speak this protocol.

If an instance speaks the SQLRClient client-server protocol, then any client that wishes to use it must also speak the SQLRClient client-server protocol. This means that most software written using the SQL Relay native API, or written using a database abstraction layer which loads a driver for SQL Relay can access this instance. However, it also means that client programs for other databases (eg. the mysql, psql, and sqlplus command line programs, MySQL Workbench, Oracle SQL Developer, SQL Server Management Studio, etc.) cannot access this instance.

Basic Configuration

SQLRClient is the native SQL Relay protocol, no special tags or attributes are required to enable it.

<?xml version="1.0"?>

<instances>

<instance id="example">

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

In this configuration:

- The connection tag instructs the instance to log in to the ora1 database as user scott with password tiger.

- By default, SQL Relay loads the sqlrclient protocol module, and listens on port 9000.

The instance can be started using:

sqlr-start -id example

and accessed from the local machine using:

sqlrsh -host localhost -user scott -password tiger

( NOTE: By default, the user and password used to access SQL Relay are the same as the user and password that SQL Relay is configured to use to access the database. That is, they are the values of the user and password parameters of the string attribute of the connection tag. )

By default, SQL Relay listens on all available network interfaces, on port 9000, and can be accessed remotely by hostname. For example, if the server running SQL Relay is named sqlrserver then it can be accessed from another system using:

sqlrsh -host sqlrserver -user scott -password tiger

The instance can be stopped using:

sqlr-stop -id example

Though it is not necessary, it is possible to explicitly configure SQL Relay to load the sqlrclient protocol module as follows:

<?xml version="1.0"?>

<instances>

<instance id="example">

<listeners>

<listener protocol="sqlrclient"/>

</listener>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

Since this instance speaks the SQLRClient client-server protocol, any client that wishes to use it must also speak the SQLRClient client-server protocol. This means that software written using the SQL Relay native API, or written using a database abstraction layer which loads a driver for SQL Relay can access this instance. However, it also means that client programs for other databases (eg. the mysql, psql, and sqlplus command line programs, MySQL Workbench, Oracle SQL Developer, SQL Server Management Studio, etc.) cannot access this instance.

Listener Options

By default, SQL Relay listens on port 9000.

However, it can be configured to listen on a different port.

<?xml version="1.0"?>

<instances>

<instance id="example">

<listeners>

<listener protocol="sqlrclient" port="9001"/>

</listeners>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

If the server has multiple network interfaces, SQL Relay can also be configured to listen on specific IP addresses.

<?xml version="1.0"?>

<instances>

<instance id="example">

<listeners>

<listener protocol="sqlrclient" addresses="192.168.1.50,192.168.1.51"/>

</listeners>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

In the example above, it can be accessed on 192.168.1.50 and 192.168.1.51 but not on 127.0.0.1 (localhost).

It can also be configured to listen on a unix socket by adding a socket attribute to the listener tag.

<?xml version="1.0"?>

<instances>

<instance id="example">

<listeners>

<listener protocol="sqlrclient" socket="/tmp/example.socket">

</listener>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

( NOTE: The user that SQL Relay runs as must be able to read and write to the path of the socket.)

If the socket option is specified but the port option is not, then SQL Relay will only listen on the socket. If port and socket options are both specified then it listens on both.

The SQLRClient protocol module also has a debug attribute. If debug="yes" is configured in the individual listener tag (not in the listeners tag), then debugging information about the protocol is written to the destination of the debug logger module (if one is defined, see the debug logger module), or otherwise, to standard output. If debug="no" is configured, or if the debug attribute is omitted, then no debugging information is written.

Authentication/Encryption Options

When using the SQLRClient protocol, there are several options for controlling which users are allowed to access an instance of SQL Relay.

Connect Strings Auth

The default authentication option is connect strings auth.

When connect strings authentication is used, a user is authenticated against the set of user/password combinations that SQL Relay is configured to use to access the database. That is, they are the values of the user and password parameters of the string attribute of the connection tag.

In the following example, SQL Relay is configured to access the database using scott/tiger credentials.

<?xml version="1.0"?>

<instances>

<instance id="example">

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

In this case, clients would also use scott/tiger credentials to access SQL Relay.

Though it is not necessary, it is possible to explicitly configure SQL Relay to load the connect strings auth module as follows:

<?xml version="1.0"?>

<instances>

<instance id="example">

<auths>

<auth module="sqlrelay_connectstrings"/>

</auths>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

User List Auth

Another popular authentication option is user list auth.

When user list authentication is used, a user is authenticated against a static list of user/password combinations, which may be different than the user/password used that SQL Relay uses to access the database.

To enable user list auth, you must provide a list of user/password combinations, as follows:

<?xml version="1.0"?>

<instances>

<instance id="example">

<auths>

<auth module="sqlrclient_userlist">

<user user="oneuser" password="onepassword"/>

<user user="anotheruser" password="anotherpassword"/>

<user user="yetanotheruser" password="yetanotherpassword"/>

</auth>

</auths>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

Database Auth

Another popular authentication option is database auth.

The sqlrclient_database module can be used to authentication users directly against the database itself. This is useful when you want the credentials used to access SQL Relay to be the same as the credentials used to access the database, but it's inconvenient to maintain a duplicate list of users in the configuration file.

SQL Relay authenticates users against the database by checking the provided credentials against the credentials that are currently in use, and then, if they differ, logging out of the database and back in using the provided credentials.

To enable database auth, configure SQL Relay to load the sqlrclient_database auth module as follows.

<?xml version="1.0"?>

<instances>

<instance id="example">

<auths>

<auth module="sqlrclient_database"/>

</auths>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

( NOTE: Database authentication should not be used in an instance where dbase="router". It's OK for the instances that the router routes to to use it, but the router instance itself should not. If database authentication is used for that instance, then authentication will fail.)

In this configuration, SQL Relay initially logs in to the database as scott/tiger as specified in the connection tag. But each time a client connects, SQL Relay logs out and attempts to log back in as the user specified by the client, unless the user/password are the same as the current user.

For example, if a client initially connects using:

sqlrsh -host localhost -user scott -password tiger

...then SQL Relay doesn't need to log out and log back in.

But if the client then tries to connect using:

sqlrsh -host localhost -user anotheruser -password anotherpassword

..then SQL Relay does need to log out and log back in.

A subsequent connection using anotheruser/anotherpassword won't require a re-login, but a subsequent connection using any other user/password will.

Though this module is convenient, it has the disadvantage that logging in and out of the database over and over partially defeats SQL Relay's persistent connection pooling.

Proxied Auth

Another authentication option is proxied auth.

When proxied authentication is used, a user is authenticated against the database itself, though in a different manner than database authentication described above. SQL Relay logs into the database as a user with permissions to proxy other users. For each client session, SQL Relay checks the provided credentials against the credentials that are currently in use. If they differ, then it asks the proxy user to switch the user it's proxying to the provided user.

This is currently only supported with Oracle (version 8i or higher) and requires database configuration. See this document for more information including instructions for configuring Oracle.

To enable proxied auth, configure SQL Relay to load the proxied auth module as follows.

<?xml version="1.0"?>

<instances>

<instance id="example">

<auths>

<auth module="sqlrclient_proxied"/>

</auths>

<connections>

<connection string="user=scott;password=tiger;oracle_sid=ora1"/>

</connections>

</instance>

</instances>

( NOTE: Proxied authentication should not be used in an instance where dbase="router". It's OK for the instances that the router routes to to use it, but the router instance itself should not. If proxied authentication is used for that instance, then authentication will fail.)

Kerberos and Active Directory Encryption and Authentication

SQL Relay supports Kerberos encryption and authentication between the SQL Relay client and SQL Relay Server.

When Kerberos encryption and authentication is used:

- All communications between the SQL Relay client and SQL Relay server are encrypted.

- A user who has authenticated against a Kerberos KDC or Active Directory Domain Controller can access SQL Relay without having to provide additional credentials.

On Linux and Unix systems, both server and client environments must be "Kerberized". On Windows systems, both server and client must join an Active Directory Domain. Note that this is only available on Professional or Server versions of Windows. Home versions cannot join Active Directory Domains.

The following configuration configures an instance of SQL Relay to use Kerberos authentication and encryption:

<?xml version="1.0"?> <instances> <instance id="example"> <listeners> <listener krb="yes" krbservice="sqlrelay" krbkeytab="/usr/local/firstworks/etc/sqlrelay.keytab"/> </listener> <auths> <auth module="sqlrclient_userlist"> <user user="dmuse@KRB.FIRSTWORKS.COM"/> <user user="kmuse@KRB.FIRSTWORKS.COM"/> <user user="imuse@KRB.FIRSTWORKS.COM"/> <user user="smuse@KRB.FIRSTWORKS.COM"/> <user user="FIRSTWORKS.COM\dmuse"/> <user user="FIRSTWORKS.COM\kmuse"/> <user user="FIRSTWORKS.COM\imuse"/> <user user="FIRSTWORKS.COM\smuse"/> </auth> </auths> <connections> <connection string="user=scott;password=tiger;oracle_sid=ora1"/> </connections> </instance> </instances>

- The krb attribute enables (or disables) Kerberos authentication and encryption.

- The krbservice attribute specifies which Kerberos service to use. This attribute is optional and defaults to "sqlrelay". It is only shown here for illustrative purposes.

- The krbkeytab attribute specifies the location of the keytab file containing the key for the specified Kerberos service. This attribute is not required on Windows. On Linux or Unix systems if this paramter is omitted, then it defaults to the system keytab, usually /etc/krb5.keytab

- User list authentication is also used. Other authentication methods are not currently supported with Kerberos.

Note that no passwords are required in the user list. Note also that users are specified in both user@REALM (Kerberos) format and REALM\user (Active Directory) format to support users authenticated against both systems.

To start the instance on a Linux or Unix system, you must be logged in as a user that can read the file specified by the krbkeytab attribute.

To start the instance on a Windows system, you must be logged in as a user that can proxy the service specified by the krbservice attribute (or it's default value of "sqlrelay" if omitted).

If those criteria are met, starting the Kerberized instance of SQL Relay is the same as starting any other instance:

sqlr-start -id example

( NOTE: When installed from packages, SQL Relay may have to be started and stopped as root.)

To access the instance, you must acquire a Kerberos ticket-granting ticket. On a Linux or Unix system, this typically involves running kinit, though a fully Kerberized environment may acquire this during login. On a Windows system, you must log in as an Active Directory domain user.

After acquiring the ticket-granting ticket, the instance of SQL Relay may be accessed from a Linux or Unix system as follows:

sqlrsh -host sqlrserver -krb

From a Windows system, it may be necessary to specify the fully qualified Kerberos service name as well:

sqlrsh -host sqlrserver -krb -krbservice sqlrelay/sqlrserver.firstworks.com@AD.FIRSTWORKS.COM

Note the absence of user and password parameters.

Kerberos authentication establishes trust between the user who acquired the ticket-granting ticket (the user running the client program) and the service (the SQL Relay server) as follows:

- The client program uses the user's ticket-granting ticket to acquire a ticket for the sqlrelay service.

- The client program then uses this service ticket to establish a security context with the SQL Relay server.

- During this process the client program sends the SQL Relay server the user name that was used to acquire the original ticket-granting ticket.

- If the security context can be successfully established, then the SQL Relay server can trust that the client program is being run by the user that it says it is.

Once the SQL Relay server trusts that the client is being run by the user that it says it is, the user is authenticated against the list of users.

While Kerberos authenticated and encrypted sessions are substantially more secure than standard SQL Relay sessions, several factors contribute to a performance penalty:

- The client program may have to acquire a service ticket from another server (the Kerberos KDC or Active Directory Domain Controller) prior to connecting to the SQL Relay server.

- When establishing the secure session, a significant amount of data must be sent back and forth between the client and server over multiple network round-trips.

- Kerberos imposes a limit on the amount of data that can be sent or received at once, so more round trips may be required when processing queries.

- Without dedicated encryption hardware and a Kerberos implementation that supports it, the computation involved in encrypting and decrypting data can also introduce delays.

Any kind of full session encryption should be used with caution in performance-sensitive applications.

TLS/SSL Encryption and Authentication

SQL Relay supports TLS/SSL encryption and authentication between the SQL Relay client and SQL Relay Server.

When TLS/SSL encryption and authentication is used:

- All communications between the SQL Relay client and SQL Relay server are encrypted.

- SQL Relay clients and servers may optionally validate each other's certificates and identities.

When using TLS/SSL encryption and authentication, at minimum, the SQL Relay server must be configured to load a certificate. For highly secure production environments, this certificate should come from a trusted certificate authority. In other environments the certificate may be self-signed, or even be borrowed from another server.

Encryption Only

The following configuration enables TLS/SSL encryption for an instance of SQL Relay:

<?xml version="1.0"?> <instances> <instance id="example"> <listeners> <listener tls="yes" tlscert="/usr/local/firstworks/etc/sqlrserver.pem"/> </listeners> <connections> <connection string="user=scott;password=tiger;oracle_sid=ora1"/> </connections> </instance> </instances>

- The tls attribute enables (or disables) TLS/SSL encryption.

- The tlscert attribute specifies the TLS/SSL certificate chain to use.

- (a .pem file is specified in this example, but on Windows systems, a .pfx file or Windows Certificate Store reference must be used. See The tlscert Parameter for details.)

- User list authentication is also used. Other authentication methods are not currently supported with TLS/SSL.

To start the instance on a Linux or Unix system, you must be logged in as a user that can read the file specified by the tlscert attribute. If that criterium is met then the instance can be started using:

sqlr-start -id example

( NOTE: When installed from packages, SQL Relay may have to be started and stopped as root.)

The instance may be accessed as follows:

sqlrsh -host sqlrserver -user scott -password tiger -tls -tlsvalidate no

This establishes a TLS/SSL-encrypted session but does not validate the server's certificate or identity. The session will only continue if the server's certificate is is well-formed and hasn't expired, but the client is still vulnerable to various attacks.

Certificate Validation

For a more secure session, the client may validate that the server's certificate was signed by a trusted certificate authority, known to the system, as follows:

sqlrsh -host sqlrserver -user scott -password tiger -tls -tlsvalidate ca

If the server's certificate is self-signed, then the certificate authority won't be known to the system, but it's certificate may be specified by the tlsca parameter as follows:

sqlrsh -host sqlrserver -user scott -password tiger -tls -tlsvalidate ca -tlsca /usr/local/firstworks/etc/myca.pem

(.pem files are specified in this example, but on Windows systems, .pfx file or Windows Certificate Store references must be used. See The tlsca Parameter for details.)

This establishes a TLS/SSL-encrypted session with the server and validates the server's certificate, but does not validate the server's identity. The session will only continue if the server's certificate is valid, but the client is still vulnerable to various attacks.

Host Name Validation

For a more secure session, the client may validate that the host name provided by the server's certificate matches the host name that the client meant to connect to, as follows:

sqlrsh -host sqlrserver.firstworks.com -user scott -password tiger -tls -tlsvalidate ca+host -tlsca /usr/local/firstworks/etc/myca.pem

(.pem files are specified in this example, but on Windows systems, .pfx file or Windows Certificate Store references must be used. See The tlsca Parameter for details.)

Note that the fully qualified host name was provided. Note also the use of the ca+host value for the tlsvalidate parameter. With these parameters, in addition to validating that the server's certificate was signed by a trusted certificate authority, the host name will also be validated. If the certificate contains Subject Alternative Names, then the host name will be compared to each of them. If no Subject Alternative Names are provided then the host name will be compared to the certificate's Common Name. The session will only continue if the sever's certificate and identity are both valid.

Domain Name Validation

Unless self-signed, certificates can be expensive, so certificates are often shared by multiple servers across a domain. To manage environments like this, the host name validation can be relaxed as follows, to just domain name validation:

sqlrsh -host sqlrserver.firstworks.com -user scott -password tiger -tls -tlsvalidate ca+domain -tlsca /usr/local/firstworks/etc/myca.pem

(.pem files are specified in this example, but on Windows systems, .pfx file or Windows Certificate Store references must be used. See The tlsca Parameter for details.)

Note that the fully qualified host name was provided. Note also the use of the ca+domain value for the tlsvalidate parameter. With these parameters, in addition to validating that the server's certificate was signed by a trusted certificate authority, the domain name portion of the host name will also be validated. If the certificate contains Subject Alternative Names, then the domain name portion of the host name will be compared to the domain name portion of each of them. If no Subject Alternative Names are provided then the domain name portion of the host name will be compared to the domain name portion of the certificate's Common Name. The session will only continue if the sever's certificate and domain identity are both valid.

Mutual Authentication

For an even more secure session, the server may also request a certificate from the client, validate the certificate, and optionally authenticate the client based on the host name provided by the certificate.

The following configuration enables these checks for an instance of SQL Relay:

<?xml version="1.0"?> <instances> <instance id="example"> <listeners> <listener tls="yes" tlscert="/usr/local/firstworks/etc/sqlrserver.pem" tlsvalidate="yes" tlsca="/usr/local/firstworks/etc/myca.pem"/> </listeners> <auths> <auth module="sqlrclient_userlist"> <user user="sqlrclient1.firstworks.com"/> <user user="sqlrclient2.firstworks.com"/> <user user="sqlrclient3.firstworks.com"/> <user user="sqlrclient4.firstworks.com"/> </auth> </auths> <connections> <connection string="user=scott;password=tiger;oracle_sid=ora1"/> </connections> </instance> </instances>

- The tlsvalidate attribute enables (or disables) validation that client's certificate was signed by a trusted certificate authority, known to the system, or as provided by the tlsca attribute.

- The tlsca attribute specifies a certificate authority to include when validating the client's certificate. This is useful when validating self-signed certificates.

- (a .pem file is specified in this example, but on Windows systems, a .pfx file or Windows Certificate Store reference must be used. See The tlsca Parameter for details.)

- User list authentication is also used.

Note that no passwords are required in the user list. In this configuration, the Subject Alternative Names in the client's certificate (or Common Name if no SAN's are present) are authenticate against the list of valid names.

Note also that when tlsvalidate is set to "yes", database and proxied authentication cannot currently be used. This is because database and proxied authentication both require a user name and password but the client certificate doesn't provide either.

To access the instance, the client must provide, at minimum, a certificate chain file (containing the client's certificate, private key, and signing certificates, as appropriate), as follows:

sqlrsh -host sqlrserver -tls -tlsvalidate no -tlscert /usr/local/firstworks/etc/sqlrclient.pem

(.pem files are specified in this example, but on Windows systems, .pfx file or Windows Certificate Store references must be used. See The tlscert Parameter for details.)

Note the absence of user and password parameters. Rather than passing a user and password, the client passes the specified certificate to the server. The server trusts that the client is who they say they are by virtue of having a valid certificate and the name provided by the certificate is authenticated against the list of valid names.

In a more likely use case though, mutual authentication occurs - the client validates the server's certificate and the server validates the client's certificate, as follows:

sqlrsh -host sqlrserver.firstworks.com -tls -tlscert /usr/local/firstworks/etc/sqlrclient.pem -tlsvalidate ca+host -tlsca /usr/local/firstworks/etc/myca.pem

(.pem files are specified in this example, but on Windows systems, .pfx file or Windows Certificate Store references must be used. See The tlscert Parameter and The tlsca Parameter for details.)

In this example, the client provides a certificate for the server to validate, validates the host's certificate against the provided certificate authority, and validates the host's identity against the provided host name.

Performance Considerations

While TLS/SSL authenticated and encrypted sessions are substantially more secure than standard SQL Relay sessions, several factors contribute to a performance penalty:

- When establishing the secure session, a significant amount of data must be sent back and forth between the client and server over multiple network round-trips.

- Some TLS/SSL implementations impose a limit on the amount of data that can be sent or received at once, so more round trips may be required when processing queries.

- Without dedicated encryption hardware and a TLS/SSL implementation that supports it, the computation involved in encrypting and decrypting data can also introduce delays.

Any kind of full session encryption should be used with caution in performance-sensitive applications.

MySQL Protocol

SQL Relay can be configured to speak the native MySQL protocol, enabling client programs for MySQL/MariaDB (eg. the mysql command line program and MySQL Workbench) to access SQL Relay directly.

If an instance speaks the MySQL client-server protocol, then any client that wishes to use it must also speak the MySQL client-server protocol. This means that most software written using the MySQL API, or written using a database abstraction layer which loads a driver for MySQL can access this instance. However, it also means that SQL Relay's standard sqlrsh client program and client programs for other databases (eg. the psql, and sqlplus command line programs, Oracle SQL Developer, SQL Server Management Studio, etc.) cannot access this instance.

Basic Configuration

To enable SQL Relay to speak the MySQL protocol, add the appropriate listener tag:

<?xml version="1.0"?> <instances> <instance id="example" dbase="mysql"> <listeners> <listener protocol="mysql" port="3307"/> </listeners> <connections> <connection string="user=mysqluser;password=mysqlpassword;db=mysqldb;host=mysqlhost"/> </connections> </instance> </instances>

In this configuration:

- The connection tag instructs the instance to log in to the mysqldb database on host mysqlhost as user mysqluser with password mysqlpassword.

- The listener tag instructs SQL Relay to load the mysql protocol module, and listen on port 3307.

The instance can be started using:

sqlr-start -id example

and accessed from the local machine using:

mysql --host=127.0.0.1 --port=3307 --user=sqlruser --password=sqlrpassword

( NOTE: 127.0.0.1 is used instead of "localhost" because "localhost" instructs the mysql client to use a predefined unix socket.)

( NOTE: By default, the user and password used to access SQL Relay are the same as the user and password that SQL Relay is configured to use to access the database. That is, they are the values of the user and password parameters of the string attribute of the connection tag. )

By default, SQL Relay listens on all available network interfaces, and can be accessed remotely by hostname. For example, if the server running SQL Relay is named sqlrserver then it can be accessed from another system using:

mysql --host=sqlrserver --port=3307 --user=sqlruser --password=sqlrpassword

The instance can be stopped using:

sqlr-stop -id example